Virtualization:-

Virtualization is a technology that has been around for decades, but it has only recently begun to make its way into the everyday vocabulary of those involved in the IT industry. It can radically transform a company’s infrastructure and improve its overall efficiency.

In computing, virtualization means creating a virtual version of a device or resource, such as a server, storage device, network or even an operating system, where a framework divides the resource into one or more execution environments. Even something as simple as partitioning a hard drive counts as virtualization, because you take one physical drive and divide it into two separate logical drives. Devices, applications and human users can interact with the virtual resource as if it were a single real resource.
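The drive-partitioning example can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the `Disk` and `Partition` classes are hypothetical names invented here, the "drive" is just a byte array in memory, and real partitioning is handled by the operating system and partition tables rather than by application code.

```python
class Disk:
    """A 'physical' drive modelled as a fixed-size byte array (illustrative only)."""

    def __init__(self, size):
        self.size = size
        self._data = bytearray(size)

    def write(self, offset, payload):
        if offset < 0 or offset + len(payload) > self.size:
            raise ValueError("write outside the drive")
        self._data[offset:offset + len(payload)] = payload

    def read(self, offset, length):
        if offset < 0 or length < 0 or offset + length > self.size:
            raise ValueError("read outside the drive")
        return bytes(self._data[offset:offset + length])


class Partition:
    """A slice of a Disk that offers the same read/write interface as a whole drive."""

    def __init__(self, disk, start, size):
        if start < 0 or size < 0 or start + size > disk.size:
            raise ValueError("partition does not fit on the drive")
        self.disk = disk
        self.start = start
        self.size = size

    def write(self, offset, payload):
        # Offsets are relative to the partition, so callers never see the real layout.
        if offset < 0 or offset + len(payload) > self.size:
            raise ValueError("write outside the partition")
        self.disk.write(self.start + offset, payload)

    def read(self, offset, length):
        if offset < 0 or length < 0 or offset + length > self.size:
            raise ValueError("read outside the partition")
        return self.disk.read(self.start + offset, length)


# One physical drive presented as two independent logical drives.
drive = Disk(1024)
first = Partition(drive, start=0, size=512)
second = Partition(drive, start=512, size=512)

first.write(0, b"mail")
second.write(0, b"web")
print(first.read(0, 4), second.read(0, 3))  # b'mail' b'web'
```

Code that uses `first` or `second` addresses each one from offset 0 and cannot reach past its own 512 bytes, which is the sense in which a user interacts with a virtual resource as if it were the real thing.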

Virtualization In Red Hat:-

Virtualization is technology that lets you create useful IT services using resources that are traditionally bound to hardware. It allows you to use a physical machine’s full capacity by distributing its capabilities among many users or environments.

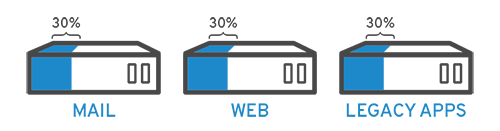

Traditionally, it was often easier and more reliable to run individual tasks on individual servers: 1 server, 1 operating system, 1 task. It wasn’t easy to give 1 server multiple brains. But with virtualization, you can split a single machine, such as a mail server, into 2 unique ones that handle independent tasks, so that legacy apps can be migrated onto it. It’s the same hardware; you’re just using more of it more efficiently.

A brief history of virtualization

While virtualization technology can be traced back to the 1960s, it wasn’t widely adopted until the early 2000s. The technologies that enabled virtualization, such as hypervisors, were developed decades ago to give multiple users simultaneous access to computers that performed batch processing. Batch processing was a popular computing style in the business sector that ran routine tasks, such as payroll, thousands of times very quickly.

But, over the next few decades, other solutions to the many users/single machine problem grew in popularity while virtualization didn’t. One of those other solutions was time-sharing, which isolated users within operating systems—inadvertently leading to other operating systems like UNIX, which eventually gave way to Linux®. All the while, virtualization remained a largely unadopted, niche technology.

Fast forward to the 1990s. Most enterprises had physical servers and single-vendor IT stacks, which didn’t allow legacy apps to run on a different vendor’s hardware. As companies updated their IT environments with less-expensive commodity servers, operating systems, and applications from a variety of vendors, they were bound to underused physical hardware: each server could only run 1 vendor-specific task.

This is where virtualization really took off. It was the natural solution to 2 problems: companies could partition their servers and run legacy apps on multiple operating system types and versions. Servers started being used more efficiently (or not at all), thereby reducing the costs associated with purchase, set up, cooling, and maintenance.

Virtualization’s widespread applicability helped reduce vendor lock-in and made it the foundation of cloud computing. It’s so prevalent across enterprises today that specialized virtualization management software is often needed to help keep track of it all.

How does virtualization work?

Software called a hypervisor separates the physical resources from the virtual environments, the things that need those resources. Hypervisors can sit on top of an operating system (like on a laptop) or be installed directly onto hardware (like a server), which is how most enterprises virtualize. Hypervisors take your physical resources and divide them up so that virtual environments can use them.

Resources are partitioned as needed from the physical environment to the many virtual environments. Users interact with and run computations within the virtual environment (typically called a guest machine or virtual machine). The virtual machine functions as a single data file. And like any digital file, it can be moved from one computer to another, opened in either one, and be expected to work the same.

When the virtual environment is running and a user or program issues an instruction that requires additional resources from the physical environment, the hypervisor relays the request to the physical system and caches the changes—which all happens at close to native speed (particularly if the request is sent through an open source hypervisor based on KVM, the Kernel-based Virtual Machine).
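The bookkeeping side of what a hypervisor does, dividing a host's resources among guests, can be sketched as below. This is a toy model, not how KVM or any real hypervisor is implemented: `Host` and `ToyHypervisor` are hypothetical names, the CPU and memory figures are made up, and real hypervisors also schedule CPU time, may overcommit resources, and emulate or pass through devices.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Physical resources of one machine (hypothetical numbers)."""
    cpus: int
    memory_gb: int


@dataclass
class ToyHypervisor:
    """Tracks how much of the host each virtual machine has been given."""
    host: Host
    guests: dict = field(default_factory=dict)  # guest name -> (cpus, memory_gb)

    def free(self):
        """Return the (cpus, memory_gb) not yet handed to any guest."""
        used_cpus = sum(cpus for cpus, _ in self.guests.values())
        used_mem = sum(mem for _, mem in self.guests.values())
        return self.host.cpus - used_cpus, self.host.memory_gb - used_mem

    def create_guest(self, name, cpus, memory_gb):
        """Carve out a share of the host, or refuse if it does not fit."""
        if name in self.guests:
            raise ValueError(f"guest {name!r} already exists")
        free_cpus, free_mem = self.free()
        if cpus > free_cpus or memory_gb > free_mem:
            raise RuntimeError("not enough physical resources left")
        self.guests[name] = (cpus, memory_gb)

    def destroy_guest(self, name):
        """Return a guest's share to the pool."""
        del self.guests[name]


hv = ToyHypervisor(Host(cpus=8, memory_gb=32))
hv.create_guest("mail", cpus=2, memory_gb=8)
hv.create_guest("web", cpus=4, memory_gb=16)
print(hv.free())  # (2, 8)
```

This toy refuses any request that exceeds what is physically left. That strict accounting is a simplifying choice for the sketch; it is not a claim about how production hypervisors behave.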

Types of virtualization

Hardware virtualization

Hardware virtualization is the virtualization of computers as complete hardware platforms, certain logical abstractions of their componentry, or only the functionality required to run various operating systems. Virtualization hides the physical characteristics of a computing platform from the users, presenting instead an abstract computing platform.[1][2] At its origins, the software that controlled virtualization was called a "control program", but the terms "hypervisor" or "virtual machine monitor" became preferred over time.[3]

Hardware virtualization or platform virtualization refers to the creation of a virtual machine that acts like a real computer with an operating system. Software executed on these virtual machines is separated from the underlying hardware resources. For example, a computer that is running Microsoft Windows may host a virtual machine that looks like a computer with the Ubuntu Linux operating system; Ubuntu-based software can be run on the virtual machine.[2][3]

Desktop virtualization

Desktop virtualization is software technology that separates the desktop environment and associated application software from the physical client device that is used to access it.

Desktop virtualization can be used in conjunction with application virtualization and user profile management systems, now termed "user virtualization," to provide a comprehensive desktop environment management system. In this mode, all the components of the desktop are virtualized, which allows for a highly flexible and much more secure desktop delivery model. In addition, this approach supports a more complete desktop disaster recovery strategy as all components are essentially saved in the data center and backed up through traditional redundant maintenance systems. If a user's device or hardware is lost, the restore is straightforward and simple, because the components will be present at login from another device. In addition, because no data are saved to the user's device, if that device is lost, there is much less chance that any critical data can be retrieved and compromised.

OS-level virtualization

OS-level virtualization refers to an operating system paradigm in which the kernel allows the existence of multiple isolated user-space instances. Such instances, called containers (Solaris, Docker), Zones (Solaris), virtual private servers (OpenVZ), partitions, virtual environments (VEs), virtual kernel (DragonFly BSD) or jails (FreeBSD jail or chroot jail),[1] may look like real computers from the point of view of programs running in them. A computer program running on an ordinary operating system can see all resources (connected devices, files and folders, network shares, CPU power, quantifiable hardware capabilities) of that computer. However, programs running inside a container can only see the container's contents and devices assigned to the container.

On Unix-like operating systems, this feature can be seen as an advanced implementation of the standard chroot mechanism, which changes the apparent root folder for the current running process and its children. In addition to isolation mechanisms, the kernel often provides resource-management features to limit the impact of one container's activities on other containers.
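The idea of an "apparent root folder" can be illustrated with a small path-translation function. This is only a sketch of the concept: `host_path` and the `/srv/jail` directory are hypothetical, and a real chroot is enforced by the kernel (and has to deal with symbolic links, open file descriptors and so on), not by string manipulation.

```python
import posixpath


def host_path(jail_root, path_in_container):
    """Translate a path seen inside the container to the host path it refers to.

    Assumes a chroot-style view: "/" inside the container is jail_root on the host.
    """
    # Normalise relative to "/" first, so ".." can never climb above the
    # container's root.
    inside = posixpath.normpath(posixpath.join("/", path_in_container))
    return posixpath.normpath(posixpath.join(jail_root, inside.lstrip("/")))


print(host_path("/srv/jail", "/etc/passwd"))       # /srv/jail/etc/passwd
print(host_path("/srv/jail", "../../etc/shadow"))  # /srv/jail/etc/shadow
print(host_path("/srv/jail", "/"))                 # /srv/jail
```

Whatever path the contained process asks for, the result stays under `/srv/jail`, which mirrors the statement that programs inside a container see only the container's contents.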

The term "container," while most popularly referring to OS-level virtualization systems, is sometimes ambiguously used to refer to fuller virtual machine environments operating in varying degrees of concert with the host OS, e.g. Microsoft's "Hyper-V Containers."

Cloud computing

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. The term is generally used to describe data centers available to many users over the Internet. Large clouds, predominant today, often have functions distributed over multiple locations from central servers. If the connection to the user is relatively close, it may be designated an edge server.

Clouds may be limited to a single organization (enterprise clouds[1][2]), be available to many organizations (public cloud), or be a combination of both (hybrid cloud).

Cloud computing relies on sharing of resources to achieve coherence and economies of scale.

Advocates of public and hybrid clouds note that cloud computing allows companies to avoid or minimize up-front IT infrastructure costs. Proponents also claim that cloud computing allows enterprises to get their applications up and running faster, with improved manageability and less maintenance, and that it enables IT teams to more rapidly adjust resources to meet fluctuating and unpredictable demand.[2][3][4] Cloud providers typically use a "pay-as-you-go" model, which can lead to unexpected operating expenses if administrators are not familiar with cloud-pricing models.[5]
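A quick calculation shows how a pay-as-you-go bill can surprise an administrator. The rate below is entirely hypothetical and chosen for round arithmetic; real providers publish their own prices and usually bill for storage, network traffic and other items as well.

```python
def monthly_cost_cents(rate_cents_per_hour, hours_running):
    """Usage-based bill for one instance, in cents (hypothetical pricing)."""
    return rate_cents_per_hour * hours_running


RATE_CENTS_PER_HOUR = 10  # assumed price, not taken from any real provider

# Instance switched on only during working hours: 8 hours x 22 working days.
office_hours_only = monthly_cost_cents(RATE_CENTS_PER_HOUR, 8 * 22)

# Same instance left running around the clock: 24 hours x 30 days.
left_running = monthly_cost_cents(RATE_CENTS_PER_HOUR, 24 * 30)

print(office_hours_only, left_running)  # 1760 7200
```

Under these assumed numbers, forgetting to shut the instance down roughly quadruples the bill, even though nothing about the workload changed.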

The availability of high-capacity networks, low-cost computers and storage devices as well as the widespread adoption of hardware virtualization, service-oriented architecture, and autonomic and utility computing has led to growth in cloud computing.[6][7][8]

Cloud computing is a general term for anything that involves delivering hosted services over the Internet. These services are broadly divided into three categories: Infrastructure-as-a-Service (IaaS), Platform-as-a-Service (PaaS) and Software-as-a-Service (SaaS). The name cloud computing was inspired by the cloud symbol that's often used to represent the Internet in flowcharts and diagrams.

Difference Between Cloud Computing and Virtualization:-

At first glance, virtualization and cloud computing may sound like similar things, but each one has a broader definition that can be applied to many different kinds of systems. Both virtualization and cloud computing are often virtual in the sense that they rely on similar models and principles. However, cloud computing and virtualization are inherently different.

Within the broad definition of virtualization, there are specific types, such as virtual storage devices, virtual machines, virtual operating systems and virtual network components for network virtualization. Virtualization just means that someone built a model of something, such as a machine or server, in code, creating a software program or function that acts like what it’s modeling. For instance, a virtual server sends and receives signals just like a physical one, even though it doesn’t have its own circuitry and other physical components.

Network virtualization is the closest type of virtualization to the kinds of setups known as cloud computing. In network virtualization, individual servers and other components are replaced by logical identifiers, rather than physical hardware pieces. For example, a virtual machine is a software representation of a computer, rather than an actual computer. Network virtualization is used for testing environments as well as actual network implementation.

Cloud computing, on the other hand, is a specific kind of IT setup that involves multiple computers or hardware pieces sending data through a wireless or IP-connected network. In most cases, cloud computing environments involve sending inputted data to remote locations through a somewhat abstract network trajectory known as "the cloud." With the popularity of cloud computing services, more and more people are understanding the cloud as a storage environment supplied by vendors that assume responsibility for data and archive security.

In short, cloud computing refers to specific kinds of vendor-provided network setups, whereas virtualization is the more general process of replacing tangible devices and controls with a system where software manages more of a network’s processes.

Thank You!
